Everything You Wanted to Know About 1X’s Latest Video

Just last month, Oslo, Norway-based 1X (formerly Halodi Robotics) announced a massive $100 million Series B, and clearly they’ve been putting the work in. A new video posted last week shows a [insert collective noun for humanoid robots here] of EVE android-ish mobile manipulators doing a wide variety of tasks leveraging end-to-end neural networks (pixels to actions). And best of all, the video seems to be more or less an honest one: a single take, at (appropriately) 1X speed, and full autonomy. But we still had questions! And 1X has answers.

If, like me, you had some very important questions after watching this video, including whether that plant is actually dead and the fate of the weighted companion cube, you’ll want to read this Q&A with Eric Jang, Vice President of Artificial Intelligence at 1X.

IEEE Spectrum: How many takes did it take to get this take?

Eric Jang: About 10 takes that lasted more than a minute; this was our first time doing a video like this, so it was more about learning how to coordinate the film crew and set up the shoot to look impressive.

Did you train your robots specifically on floppy things and transparent things?

Jang: Nope! We train our neural network to pick up all kinds of objects: rigid, deformable, and transparent. Because we train manipulation end-to-end from pixels, picking up deformable and transparent objects is much easier than with a classical grasping pipeline, where you have to figure out the exact geometry of what you are trying to grasp.

What keeps your robots from doing these tasks faster?

Jang: Our robots learn from demonstrations, so they go at exactly the same speed the human teleoperators demonstrate the task at. If we gathered demonstrations where we move faster, so would the robots.

How many weighted companion cubes were harmed in the making of this video?

Jang: At 1X, weighted companion cubes do not have rights.

That’s a very cool method for charging, but it seems a lot more complicated than some kind of drive-on interface directly with the base. Why use manipulation instead?

Jang: You’re right that this isn’t the simplest way to charge the robot, but if we are going to succeed at our mission to build generally capable and reliable robots that can manipulate all kinds of objects, our neural nets have to be able to do this task at the very least. Plus, it reduces costs quite a bit and simplifies the system!

What animal is that blue plush supposed to be?

Jang: It’s an obese shark, I think.

How many different robots are in this video?

Jang: 17? And more that are stationary.

How do you tell the robots apart?

Jang: They have little numbers printed on the base.

Is that plant dead?

Jang: Yes, we put it there because no CGI or 3D-rendered video would ever go to the trouble of adding a dead plant.

What sort of existential crisis is the robot at the window having?

Jang: It was supposed to be opening and closing the window repeatedly (good for testing statistical significance).

If one of the robots was actually a human in a helmet and a suit holding grippers and standing on a mobile base, would I be able to tell?

Jang: I was super flattered by this comment on the YouTube video:

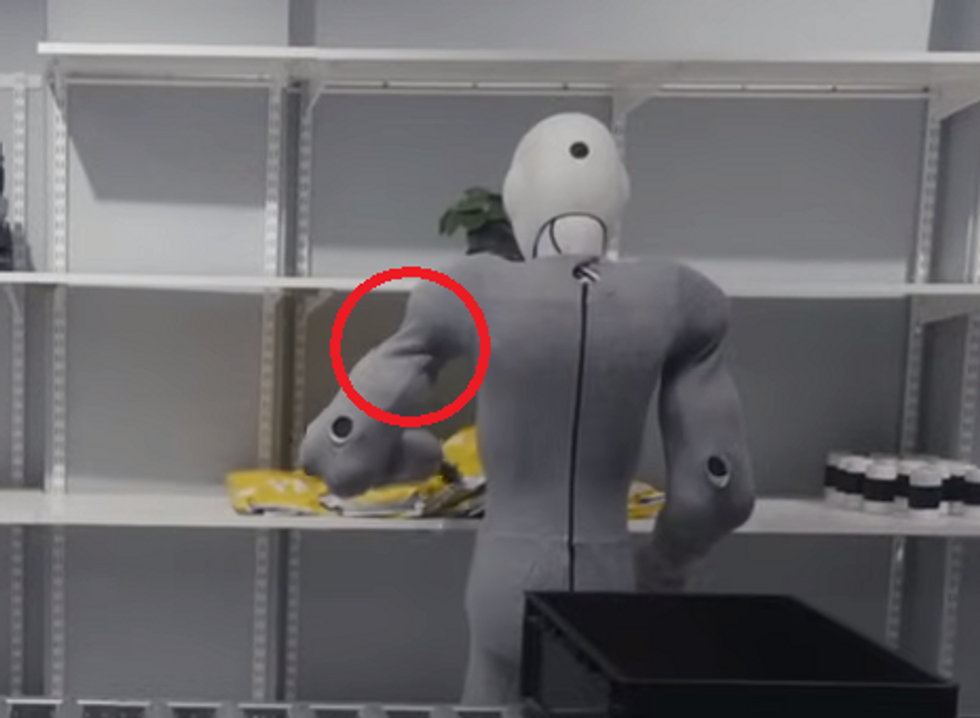

But if you look at the area where the upper arm tapers at the shoulder, it’s too thin for a human to fit inside while still having such broad shoulders.

Why are your robots so happy all the time? Are you planning to do more complex HRI stuff with their faces?

Jang: Yes, more complex HRI stuff is in the pipeline!

Are your robots able to autonomously collaborate with each other?

Jang: Stay tuned!

Is the skew tetromino the most difficult tetromino for robotic manipulation?

Jang: Good catch! Yes, the green one is the worst of them all because there are many valid ways to pinch it with the gripper and lift it up. In robotic learning, if there are multiple ways to pick something up, it can actually confuse the machine learning model. Kind of like asking a car to turn left and right at the same time to avoid a tree.
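Jang’s left-and-right analogy can be made concrete with a toy example. The sketch below is not 1X’s model or training setup; it is a minimal illustration that assumes demonstrations are a single steering value, either -1.0 (left) or +1.0 (right), and that the learner is fit by minimizing mean squared error. The function names and numbers are hypothetical.

```python
import random
from statistics import mean

def make_demos(n=1000, seed=0):
    """Hypothetical demonstrations: each one steers left (-1.0) or right (+1.0).
    Both choices are valid ways to get around the tree."""
    rng = random.Random(seed)
    return [rng.choice([-1.0, 1.0]) for _ in range(n)]

def mse(prediction, demos):
    """Mean squared error of one constant prediction against all demos."""
    return mean((prediction - action) ** 2 for action in demos)

demos = make_demos()

# The constant prediction that minimizes mean squared error is the average
# of the demonstrated actions.
averaged_action = mean(demos)

# Distance from the averaged action to the nearest action a human actually chose.
gap_to_valid = min(abs(averaged_action - valid) for valid in (-1.0, 1.0))

print(f"averaged action: {averaged_action:+.3f}")
print(f"error if averaging: {mse(averaged_action, demos):.3f}")
print(f"error if always steering right: {mse(1.0, demos):.3f}")
print(f"gap to nearest demonstrated action: {gap_to_valid:.3f}")
```

The averaged action scores better on squared error than committing to either side, yet it sits near zero, which in this toy setup means driving straight at the tree. That is the sense in which several equally valid grasps can confuse a model trained to imitate them.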

Everyone else’s robots are making coffee. Can your robots make coffee?

Jang: Yep! We were planning to throw some coffee making into this video as an Easter egg, but the coffee machine broke right before the film shoot, and it turns out it’s impossible to get a Keurig K-Slim in Norway via next-day shipping.

1X is currently hiring both AI researchers (imitation learning, reinforcement learning, large-scale training, etc.) and android operators (!), which actually sounds like a super fun and interesting job. More here.